Data Migration from Azure Data Lake Storage Gen1 to Gen2

Microsoft + Azure + Finance

Sector

Finance

Practice

Data migration

Technology

Azure Data Lake Storage (Gen1 and Gen2), Azure Data Factory, Azure Databricks

Our Role

Migrating all data from Azure Data Lake Storage Gen1 to Gen2

Project Success

The migration from Azure Data Lake Storage Gen1 to Gen2 was completed successfully, with the utmost care taken to preserve data and functionality.

Project Duration

1 month

Following Microsoft's announcement that Azure Data Lake Storage Gen1 would be retired by the end of February 2024, a reputable finance organization approached SoHo to migrate its data warehouse of approximately 70 terabytes to Gen2.

The migration required translating existing U-SQL workloads, which cannot be moved directly to Gen2. Because U-SQL is not available with Gen2, the SoHo team had to preserve the ability to query the data after migration by other means. This complexity ruled out a simple lift and shift.

Azure Data Lake Storage Gen2 doesn't support Azure Data Lake Analytics, so using the Azure portal to migrate the Gen1 account risked breaking the existing analytics. The team at SoHo had to solve this before migrating.

In addition to the technical challenges, SoHo had the critical task of ensuring seamless integration with Gen2 while keeping disruption to the finance organization to a minimum.

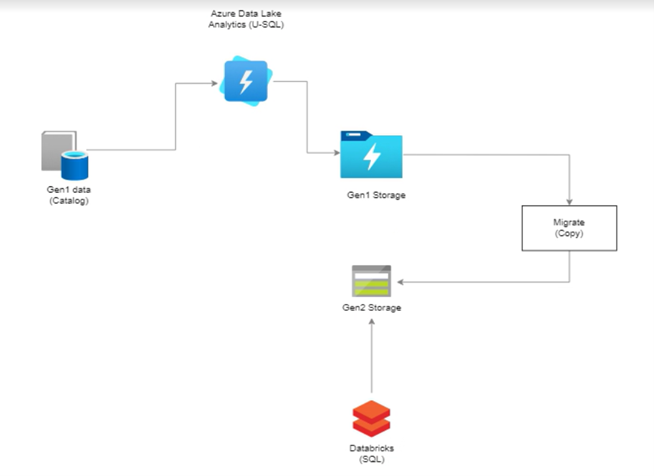

The whole migration was planned in multiple phases:

- Data extraction: As the files stored in Gen1 could not be lifted and shifted, the SoHo team wrote U-SQL queries to extract the data from the database and restore it.

- Data migration: Using Azure Data Factory pipelines, the team copied around 3,000 tables and thousands of files into a defined structure. The pipelines were designed to move data from Gen1 storage to Gen2 storage efficiently.

- Query conversion: Existing U-SQL queries were converted into Databricks SQL queries so that business users could use the migrated data without interruption.

- Catalog creation and schema definition: The SoHo team used Azure Databricks Unity Catalog to create catalogs and schemas. Unity Catalog provides centralized access control, auditing, lineage, and data discovery across Azure Databricks workspaces. External tables configured in Azure Databricks make the data stored in Azure Data Lake Storage Gen2 accessible for querying.

- Data reconciliation: In the final step, the data in Gen2 was reconciled against the source data in Gen1. The SoHo team compared row counts and checksums for each table to verify that the migrated data was accurate.
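
The reconciliation step can be illustrated with a short Python sketch. It is not SoHo's actual tooling: the function names (`fingerprint`, `reconcile`) and the choice of SHA-256 are assumptions made for illustration, and it assumes rows from both sides have already been read into Python with matching value types. It shows how a row count and an order-independent checksum per table can be compared between source and target.

```python
import hashlib
from typing import Dict, Iterable, NamedTuple, Sequence


class TableFingerprint(NamedTuple):
    row_count: int
    checksum: str


def fingerprint(rows: Iterable[Sequence[object]]) -> TableFingerprint:
    """Return the row count and an order-independent checksum for a table."""
    count = 0
    combined = 0
    for row in rows:
        # Serialize each row deterministically; the unit separator keeps
        # ("ab", "c") and ("a", "bc") from producing the same bytes.
        encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
        digest = hashlib.sha256(encoded).digest()
        # Adding digests modulo 2**256 makes the result independent of
        # the order in which rows are read from storage.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return TableFingerprint(count, f"{combined:064x}")


def reconcile(
    source: Dict[str, TableFingerprint],
    target: Dict[str, TableFingerprint],
) -> Dict[str, str]:
    """Compare fingerprints per table; return {table: reason} for mismatches."""
    issues: Dict[str, str] = {}
    for name, src in source.items():
        tgt = target.get(name)
        if tgt is None:
            issues[name] = "missing in target"
        elif src.row_count != tgt.row_count:
            issues[name] = f"row count {src.row_count} != {tgt.row_count}"
        elif src.checksum != tgt.checksum:
            issues[name] = "checksum mismatch"
    return issues


# Hypothetical table name and rows, purely for illustration.
gen1 = {"trades": fingerprint([(1, "a"), (2, "b")])}
gen2 = {"trades": fingerprint([(2, "b"), (1, "a")])}  # same rows, other order
print(reconcile(gen1, gen2))
```

At the scale described here, the per-table counts and checksums would more plausibly be computed inside the query engine rather than by pulling rows into Python; the comparison logic stays the same.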

SoHo successfully handled the challenges stemming from the phasing out of Azure Data Lake Storage Gen1. The smooth transition to Azure Data Lake Storage Gen2 ensured uninterrupted data access and analysis capabilities across the client’s business operations.

- The team at SoHo migrated 70 terabytes of data from Gen1 to Gen2 ahead of schedule, a significant relief for the finance organization.

- The catalog built in Azure Databricks Unity Catalog allows efficient and reliable data querying.

- Migration and reconciliation processes guaranteed data integrity and accuracy throughout the transition.

- Business users transitioned seamlessly from U-SQL queries to Databricks SQL, leveraging the migrated data effectively.

- The migration from Gen1 to Gen2 was executed without ever hindering the ability to query and analyze data.
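
For readers curious about the catalog and external-table setup described in the migration phases, the following Python sketch generates the kind of Databricks SQL statements involved. It is a hedged illustration only: the helper names, the catalog, schema, table, container, and storage account names, and the Parquet file format are all hypothetical and not taken from the project. It also assumes the workspace already has Unity Catalog access to the storage path.

```python
from typing import List


def abfss_uri(container: str, account: str, path: str) -> str:
    """Build an ADLS Gen2 URI of the form abfss://container@account.dfs.core.windows.net/path."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.strip('/')}"


def quote(identifier: str) -> str:
    """Backtick-quote a SQL identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def external_table_ddl(
    catalog: str,
    schema: str,
    table: str,
    location: str,
    file_format: str = "PARQUET",  # assumed format; the source does not state one
) -> List[str]:
    """Return statements that create a catalog, a schema, and an external table."""
    qualified_schema = f"{quote(catalog)}.{quote(schema)}"
    qualified_table = f"{qualified_schema}.{quote(table)}"
    return [
        f"CREATE CATALOG IF NOT EXISTS {quote(catalog)}",
        f"CREATE SCHEMA IF NOT EXISTS {qualified_schema}",
        # LOCATION makes the table external: the data stays in ADLS Gen2.
        f"CREATE TABLE IF NOT EXISTS {qualified_table} "
        f"USING {file_format} LOCATION '{location}'",
    ]


# Hypothetical names, for illustration only.
location = abfss_uri("warehouse", "examplestorage", "/finance/trades/")
for statement in external_table_ddl("finance_dw", "core", "trades", location):
    print(statement)
```

Generating the statements from a table inventory, rather than writing them by hand, is one way to keep a few thousand table definitions consistent.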

To immediately book a meeting with a SoHo team member, please click this 👉LINK
